Artificial intelligence (AI) has been applied in a wide range of domains. On one hand, AI is a powerful tool for identifying and responding to potential cyber threats based on learned patterns; on the other hand, with great power comes great vulnerability: AI itself is susceptible to a variety of adversarial attacks.

Using AI techniques, our research investigates potential vulnerabilities of automatic speech recognition (ASR) systems. By leveraging the physical properties and time-frequency features of audio, we design defense schemes against various malicious audio attacks.

To secure AI, we provide a generic defense system that can capture sophisticated localized partial attacks.

AI for security: Modulated Replay Attack, SIEVE

Security of AI: TaintRadar

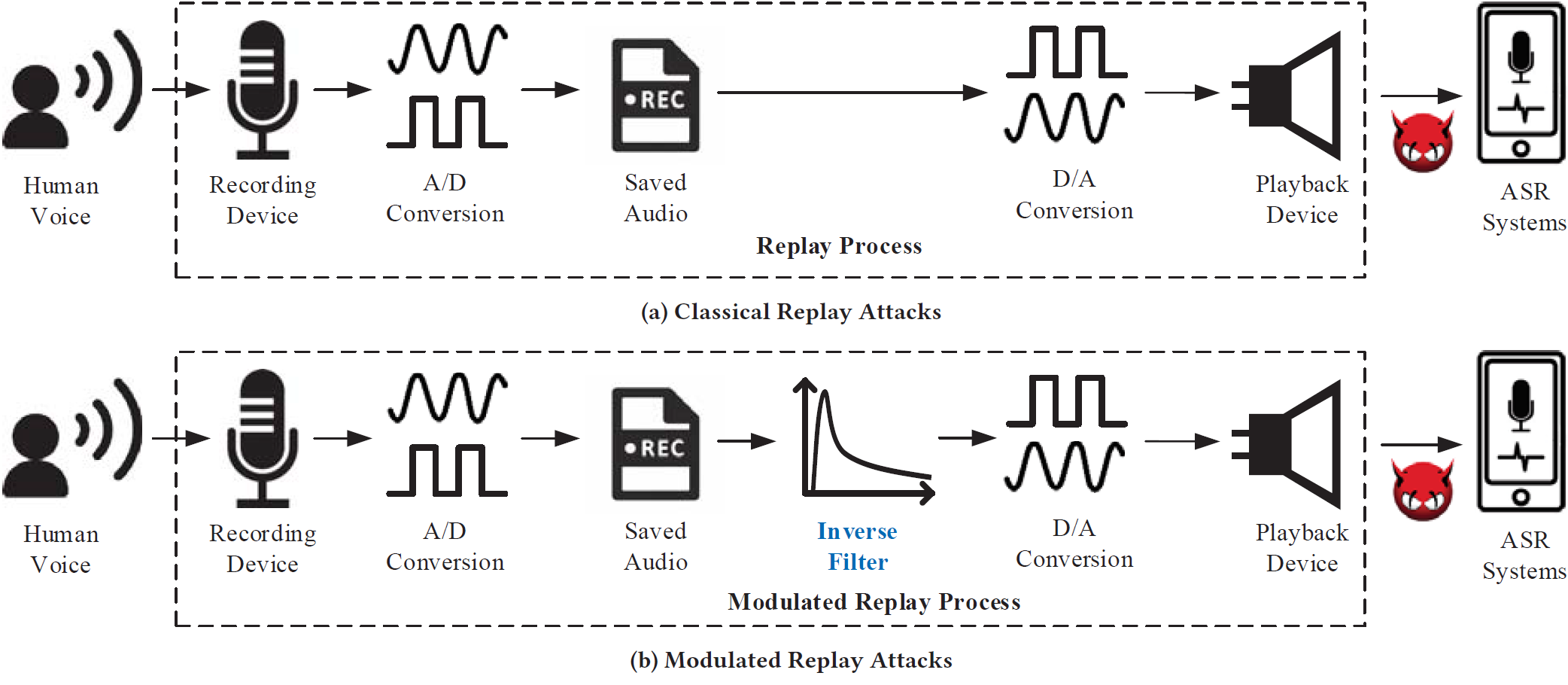

Automatic speech recognition (ASR) systems have been widely deployed in modern smart devices to provide convenient and diverse voice-controlled services. Since ASR systems are vulnerable to audio replay attacks that can spoof and mislead ASR systems, a number of defense systems have been proposed to identify replayed audio signals based on the speakers’ unique acoustic features in the frequency domain. In our paper, we uncover a new type of replay attack called modulated replay attack, which can bypass the existing frequency domain based defense systems.

The basic idea is to compensate for the frequency distortion of a given electronic speaker using an inverse filter that is customized to the speaker's transfer characteristics. Our experiments on real smart devices confirm that modulated replay attacks can successfully evade existing detection mechanisms that rely on identifying suspicious features in the frequency domain.
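The compensation idea can be sketched in a few lines of NumPy. This is a minimal illustration under simplifying assumptions, not the paper's filter design: the speaker is modeled as a zero-phase filter with a made-up magnitude response `f / (f + 0.05)` that attenuates low frequencies, the test signal is three pure tones rather than speech, and the inverse is regularized with a hypothetical constant `eps`. The names `speaker_play` and `inverse_filter` are illustrative only.

```python
import numpy as np

def speaker_play(audio, response):
    """Toy loudspeaker: zero-phase filter with magnitude `response` on the rfft bins."""
    return np.fft.irfft(np.fft.rfft(audio) * response, n=len(audio))

def inverse_filter(audio, response, eps=1e-3):
    """Pre-distort `audio` with a regularized inverse of the (real) speaker response."""
    inverse = response / (response ** 2 + eps)   # bounded even where response ~ 0
    return np.fft.irfft(np.fft.rfft(audio) * inverse, n=len(audio))

n = 1024
t = np.arange(n)
x = sum(np.sin(2 * np.pi * k * t / n) for k in (5, 20, 60))  # stand-in for speech
f = np.fft.rfftfreq(n)             # normalized frequency in cycles/sample, 0 to 0.5
response = f / (f + 0.05)          # hypothetical speaker that attenuates low frequencies

raw = speaker_play(x, response)                                  # plain replay
modulated = speaker_play(inverse_filter(x, response), response)  # compensated replay
err_raw = np.linalg.norm(raw - x) / np.linalg.norm(x)
err_mod = np.linalg.norm(modulated - x) / np.linalg.norm(x)
print(f"relative error, plain replay:     {err_raw:.3f}")
print(f"relative error, modulated replay: {err_mod:.3f}")
```

The pre-compensated signal should come out of the toy speaker much closer to the original. The regularization is a design choice: a plain `1 / response` would divide by zero at DC, where this toy speaker has zero gain.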

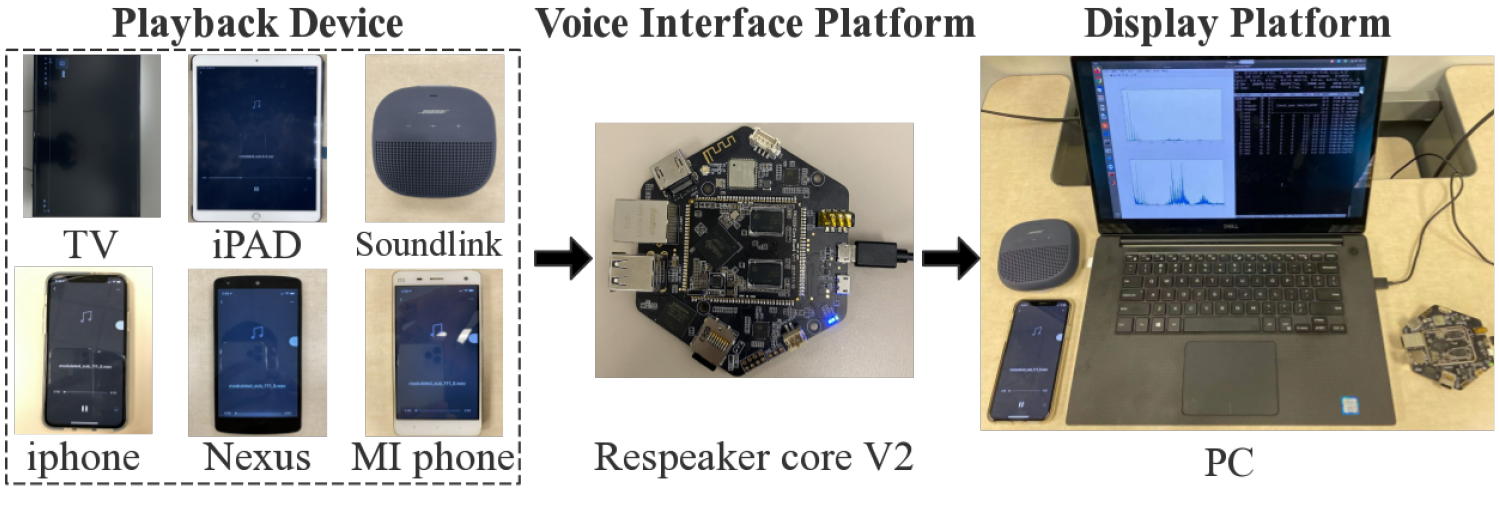

To defeat modulated replay attacks, we design and implement a countermeasure named DualGuard. We discover and formally prove that, no matter how the replay audio signals are modulated, a replay attack will either leave ringing artifacts in the time domain or cause spectrum distortion in the frequency domain. Therefore, by jointly checking for suspicious features in both the frequency and time domains, DualGuard can successfully detect various replay attacks, including modulated replay attacks. We implement a prototype of DualGuard on a popular voice interactive platform, ReSpeaker Core v2. Experimental results show that DualGuard achieves 98% accuracy in detecting modulated replay attacks.
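One way to build intuition for the time-domain half of this claim is the classic Gibbs-type trade-off: the sharper a compensating filter's frequency response, the longer its impulse response rings. The sketch below compares two hypothetical low-band boosts (a brick-wall step and a logistic ramp, both invented for illustration) and measures how much of each filter's energy lies far from its main tap. It is neither DualGuard's detector nor its proof; the function names and the 64-tap window are assumptions.

```python
import numpy as np

def zero_phase_fir(gain: np.ndarray) -> np.ndarray:
    """Centered impulse response of a zero-phase filter with magnitude `gain` on rfft bins."""
    return np.fft.fftshift(np.fft.irfft(gain))

def tail_energy_ratio(h: np.ndarray, half_width: int = 64) -> float:
    """Fraction of filter energy lying more than `half_width` taps from the main tap."""
    center = int(np.argmax(np.abs(h)))
    core = h[max(center - half_width, 0): center + half_width + 1]
    return float(1.0 - np.sum(core ** 2) / np.sum(h ** 2))

f = np.linspace(0.0, 1.0, 257)  # normalized frequency (1.0 = Nyquist) on 257 rfft bins
# Two hypothetical ways to boost the low band that a loudspeaker attenuates:
sharp = np.where(f < 0.05, 10.0, 1.0)                     # brick-wall compensation
smooth = 1.0 + 9.0 / (1.0 + np.exp((f - 0.05) / 0.01))   # gradual compensation

sharp_tail = tail_energy_ratio(zero_phase_fir(sharp))
smooth_tail = tail_energy_ratio(zero_phase_fir(smooth))
print(f"energy outside +/-64 taps, sharp boost:  {sharp_tail:.5f}")
print(f"energy outside +/-64 taps, smooth boost: {smooth_tail:.5f}")
```

The brick-wall boost leaves far more energy in its oscillating tail, i.e., it rings. A softer boost rings less but no longer matches a sharp speaker roll-off exactly, which, in the terms of the paragraph above, is where frequency-domain distortion would show up instead.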

Published in the ACM Conference on Computer and Communications Security (CCS) 2020.


@inproceedings{wang2020modreplay,
  author = {Wang, Shu and Cao, Jiahao and He, Xu and Sun, Kun and Li, Qi},
  title = {When the Differences in Frequency Domain Are Compensated: Understanding and Defeating Modulated Replay Attacks on Automatic Speech Recognition},
  year = {2020},
  isbn = {9781450370899},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3372297.3417254},
  doi = {10.1145/3372297.3417254},
  booktitle = {Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security},
  pages = {1103--1119},
  numpages = {17},
  keywords = {ringing artifacts, automatic speech recognition, modulated replay attack, frequency distortion},
  location = {Virtual Event, USA},
  series = {CCS '20}
}

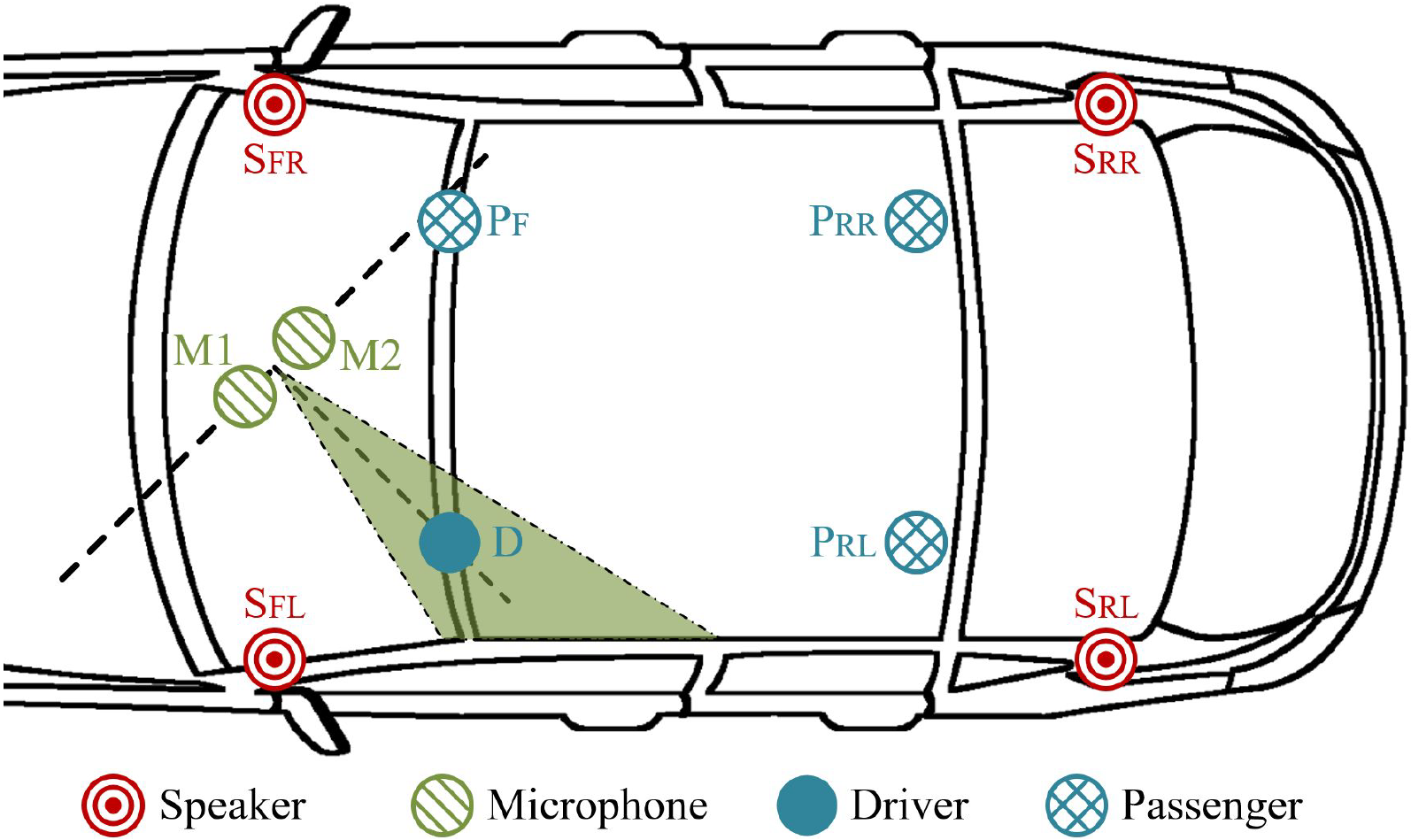

Driverless vehicles are becoming an irreversible trend in our daily lives, and humans can interact with cars through in-vehicle voice control systems. However, the automatic speech recognition (ASR) module in these voice control systems is vulnerable to adversarial voice commands, which may cause unexpected behaviors or even accidents in driverless cars. Given the high demand for security assurance, defending in-vehicle ASR systems against adversarial voice commands from various sources in a noisy driving environment remains a challenge.

In our paper, we develop a secure in-vehicle ASR system called SIEVE, which can effectively distinguish voice commands issued by the driver, passengers, or electronic speakers in three steps. First, it filters out multiple-source voice commands played from multiple vehicle speakers by leveraging autocorrelation analysis. Second, it identifies whether a single-source voice command comes from a human or an electronic speaker using a novel dual-domain detection method. Finally, it leverages the direction of each voice source to distinguish the driver's voice from those of the passengers. We implement a prototype of SIEVE and perform a real-world study under different driving conditions. Experimental results show that SIEVE can defeat various adversarial voice commands against in-vehicle ASR systems.
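The first step can be illustrated with a toy autocorrelation check. When the same command is played through several vehicle speakers, the microphone receives overlapping copies offset by their propagation delays, so the signal's autocorrelation shows a secondary peak at that delay. The sketch below is a simplification under stated assumptions, not SIEVE's implementation: it uses white noise instead of speech (real speech also has pitch-related autocorrelation peaks that a practical detector must account for), a single hypothetical echo of 120 samples, and made-up values for the lag range and `threshold`.

```python
import numpy as np

def normalized_autocorr(x: np.ndarray) -> np.ndarray:
    """Linear autocorrelation for non-negative lags, normalized so lag 0 equals 1."""
    x = x - x.mean()
    n = len(x)
    spec = np.fft.rfft(x, 2 * n)                 # zero-pad to avoid circular wrap-around
    ac = np.fft.irfft(spec * np.conj(spec))[:n]
    return ac / ac[0]

def looks_multi_source(x, min_lag, max_lag, threshold=0.3):
    """Flag `x` if its autocorrelation has a strong peak in [min_lag, max_lag)."""
    ac = normalized_autocorr(x)
    peak_lag = min_lag + int(np.argmax(ac[min_lag:max_lag]))
    return bool(ac[peak_lag] > threshold), peak_lag

rng = np.random.default_rng(0)
source = rng.standard_normal(16000)      # 1 s at a hypothetical 16 kHz sample rate
single = source
delay = 120                              # hypothetical extra path delay, in samples
multi = source.copy()
multi[delay:] += 0.8 * source[:-delay]   # second speaker: attenuated, delayed copy

print(looks_multi_source(single, 20, 400))
print(looks_multi_source(multi, 20, 400))
```

With these settings the two-speaker signal is flagged with its peak at the injected delay, while the single-source signal stays well below the threshold.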

Published in the 23rd International Symposium on Research in Attacks, Intrusions and Defenses (RAID) 2020.


@inproceedings{wang2020sieve,
  author = {Shu Wang and Jiahao Cao and Kun Sun and Qi Li},
  title = {{SIEVE}: Secure In-Vehicle Automatic Speech Recognition Systems},
  booktitle = {23rd International Symposium on Research in Attacks, Intrusions and Defenses ({RAID} 2020)},
  year = {2020},
  isbn = {978-1-939133-18-2},
  address = {San Sebastian},
  pages = {365--379},
  url = {https://www.usenix.org/conference/raid2020/presentation/wang-shu},
  publisher = {{USENIX} Association},
  month = oct,
}

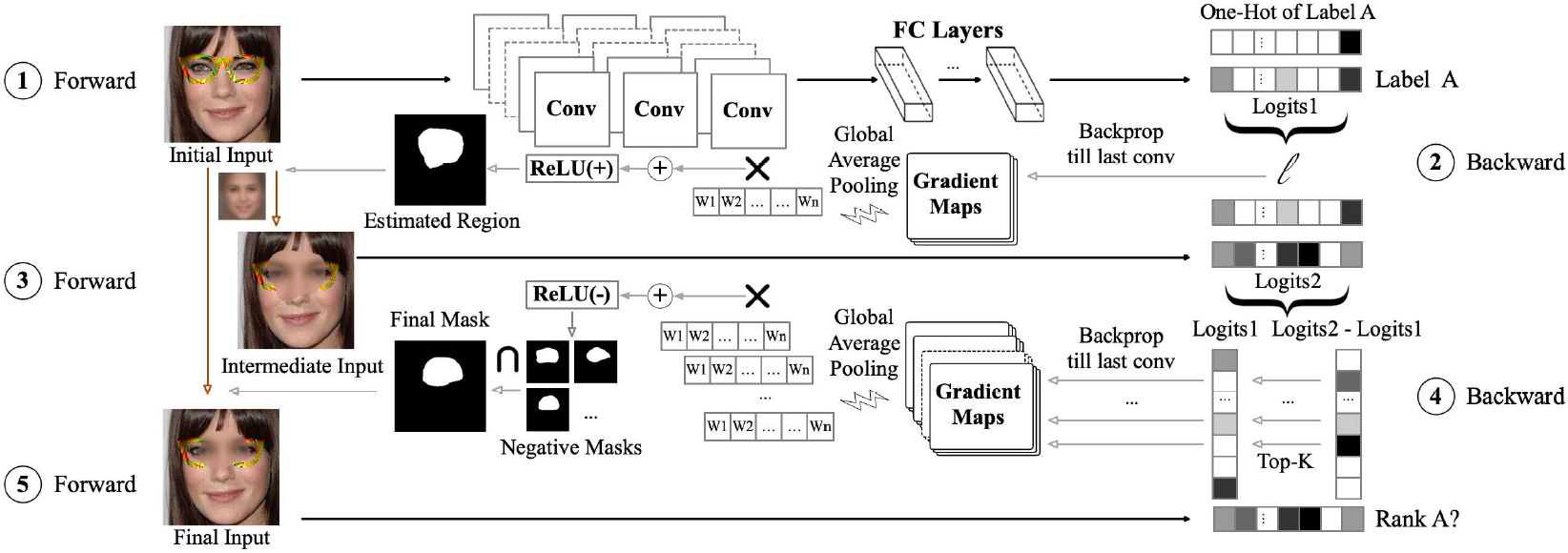

Deep neural networks (DNNs) have been applied in a wide range of applications, e.g., face recognition and image classification; however, they are vulnerable to adversarial examples. By adding a small amount of imperceptible perturbation, an attacker can easily manipulate the outputs of a DNN. In particular, localized adversarial examples perturb only a small, contiguous region of the target object, which makes them robust and effective in both the digital and physical worlds. Although localized adversarial examples have more severe real-world impacts than traditional pixel attacks, they have not been well addressed in the literature. In this paper, we propose a generic defense system called TaintRadar to accurately detect localized adversarial examples by analyzing the critical regions that have been manipulated by attackers. The main idea is that, when critical regions are removed from input images, the ranking changes of adversarial labels will be larger than those of benign labels. Compared with existing defense solutions, TaintRadar can effectively capture sophisticated localized partial attacks, e.g., the eyeglasses attack, without requiring additional training or fine-tuning of the original model. Comprehensive experiments in both the digital and physical worlds verify the effectiveness and robustness of our defense.
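The core intuition, that removing the critical region should demote an adversarial label far more than a benign one, can be shown with a toy example. Everything here is hypothetical: a 3-class linear `model` on 8×8 images stands in for a DNN, input-times-weight contribution stands in for whatever analysis TaintRadar uses to locate critical regions, the region is removed by zeroing it, and a real rank-change threshold would have to be chosen empirically.

```python
import numpy as np

HEIGHT, WIDTH, N_CLASSES = 8, 8, 3

# Hypothetical toy "DNN": a linear model, logits[c] = sum(weights[c] * image).
weights = np.zeros((N_CLASSES, HEIGHT, WIDTH))
weights[0] = 0.2            # class 0 responds to overall brightness
weights[1] = 0.1            # class 1 is a weaker brightness detector
weights[2, :2, :2] = 2.0    # class 2 reacts only to the top-left 2x2 corner

def model(image):
    return np.einsum("chw,hw->c", weights, image)

def rank_of(logits, label):
    """Rank of `label` (0 = top): how many labels score strictly higher."""
    return int(np.sum(logits > logits[label]))

def find_critical_region(image, label, k=2):
    """Top-left corner of the k x k window contributing most to `label` (toy saliency)."""
    contrib = weights[label] * image
    best, best_pos = -np.inf, (0, 0)
    for r in range(HEIGHT - k + 1):
        for c in range(WIDTH - k + 1):
            score = contrib[r:r + k, c:c + k].sum()
            if score > best:
                best, best_pos = score, (r, c)
    return best_pos

def rank_change(image, k=2):
    """How far the original top label falls after zeroing its critical region."""
    logits = model(image)
    top = int(np.argmax(logits))
    r, c = find_critical_region(image, top, k)
    masked = image.copy()
    masked[r:r + k, c:c + k] = 0.0
    return rank_of(model(masked), top) - rank_of(logits, top)

benign = np.full((HEIGHT, WIDTH), 0.5)
patched = benign.copy()
patched[:2, :2] = 1.0       # localized "adversarial patch" in the corner

print("benign rank change: ", rank_change(benign))
print("patched rank change:", rank_change(patched))
```

Here the patched image's top label drops from rank 0 to rank 2 once its critical 2×2 region is zeroed, while the benign image keeps its top label; a detector along these lines would flag inputs whose rank change exceeds a chosen threshold.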

Workflow of TaintRadar.

Published in the IEEE International Conference on Computer Communications (INFOCOM) 2021.


@misc{li2021detecting,
  title={Detecting Localized Adversarial Examples: A Generic Approach using Critical Region Analysis},
  author={Fengting Li and Xuankai Liu and Xiaoli Zhang and Qi Li and Kun Sun and Kang Li},
  year={2021},
  eprint={2102.05241},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}